TensorFlow is a library that is often used with Python to solve deep learning problems, such as object recognition in images, style transfer as you might know it from apps like DeepArt and Prisma, or language tools that can recognize the mood of a tweet. I use TensorFlow a lot, with Keras as the API, to try different things.

If you just want to test something quickly, you don’t have to install anything. You can use the online tool Google CoLab instead, which even offers a GPU for free. More about this in the blog post “Use Google CoLab with GPU or TPU”.

You can run TensorFlow on the CPU, which works relatively well with the usual examples like MNIST. These are relatively simple problems with a manageable data set: MNIST consists of 70,000 small 28×28 pixel images.

Installation of TensorFlow for the CPU

For the CPU you can easily install TensorFlow with “pip install tensorflow” and try all the tutorials, if you have enough time.
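To confirm that the pip install worked, a small check like the following can help. It is a minimal sketch that only uses the Python standard library (Python 3.8 or newer assumed); the helper name `installed_version` is hypothetical and not part of TensorFlow.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version of a pip package, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# Check both the CPU package and the separate GPU package name.
for name in ("tensorflow", "tensorflow-gpu"):
    print(name, "->", installed_version(name) or "not installed")
```

If both lines print "not installed", pip probably installed into a different Python environment than the one running the script.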

Advantage of GPU support

The advantage of the GPU-supported variant of TensorFlow is that models are computed much faster. An NVIDIA graphics card is required!

Today I ran a short classification test with a complex model. On the CPU it took about 50 seconds, despite an Intel i7 with 8 cores.

The same model needed only 16 seconds on the GPU!
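To put the two timings in relation, the speedup can be computed directly. This is just the arithmetic on the numbers from my test; the function name `speedup` is made up for illustration.

```python
def speedup(cpu_seconds, gpu_seconds):
    """Return how many times faster the GPU run was than the CPU run."""
    if gpu_seconds <= 0:
        raise ValueError("gpu_seconds must be positive")
    return cpu_seconds / gpu_seconds


# Timings from the test above: 50 s on the CPU, 16 s on the GPU.
factor = speedup(50, 16)
print(f"GPU speedup: {factor:.1f}x")  # prints "GPU speedup: 3.1x"
```

So for this one model the GPU was roughly three times faster. Other models and other hardware will give different factors.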

Installation of TensorFlow for the GPU

Check that you install the correct versions of CUDA and cuDNN for your TensorFlow version. Otherwise TensorFlow will not start.

TensorFlow GPU version 1.10.0 requires CUDA 9.0 and cuDNN 7.4.2 for CUDA 9.0 –> https://stackoverflow.com/questions/50622525/which-tensorflow-and-cuda-version-combinations-are-compatible
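If you manage several machines, it can help to write the required combination down as data and check against it. The sketch below is an assumption about how one might do that; the table contains only the single combination named in this post, and the names `REQUIRED` and `check_combo` are hypothetical.

```python
# Known-good combination taken from this post only; extend it from the
# compatibility list linked above rather than guessing.
REQUIRED = {
    "1.10.0": {"cuda": "9.0", "cudnn": "7.4.2"},
}


def check_combo(tf_version, cuda, cudnn):
    """Return True/False for a known TensorFlow version, None if unknown."""
    wanted = REQUIRED.get(tf_version)
    if wanted is None:
        return None  # not in the table, so no statement is possible
    return wanted["cuda"] == cuda and wanted["cudnn"] == cudnn


print(check_combo("1.10.0", "9.0", "7.4.2"))   # True
print(check_combo("1.10.0", "10.0", "7.4.2"))  # False
print(check_combo("1.12.0", "9.0", "7.4.2"))   # None
```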

1. Download & Installation of cuDNN

- You need a login to download it from –> https://developer.nvidia.com/rdp/cudnn-download

- Choose cuDNN 7.4.2 for CUDA 9.0 and download it

- Extract it to, for example, “C:\cudnn-9.0-v7.4.2.24”

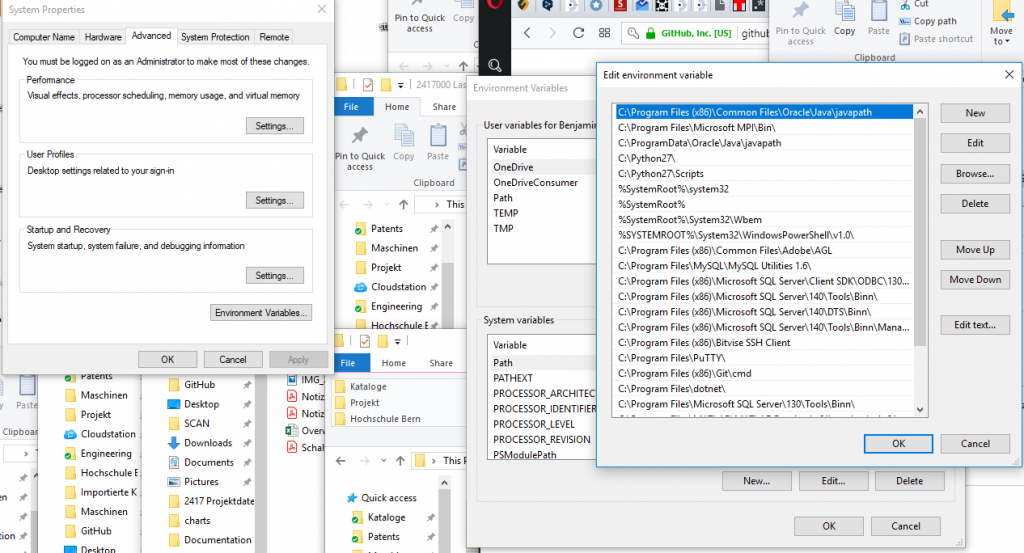

- Add the following paths to the system environment paths (“System –> Advanced system settings –> Environment Variables –> System variables –> Path”):

- –> “C:\cudnn-9.0-v7.4.2.24”

- –> “C:\cudnn-9.0-v7.4.2.24\bin”
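To verify that the two cuDNN directories really ended up on the path, something like the following can be run in a freshly opened command line (already open windows do not pick up the change). It is a rough sketch using only the standard library; the helper `missing_from_path` is hypothetical, and the directory names are the example ones from above.

```python
import os


def missing_from_path(required_dirs, path_value=None, sep=os.pathsep):
    """Return the directories from required_dirs that are not listed in PATH."""
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    # Windows paths are case-insensitive, so compare lowercased entries
    # and ignore a trailing slash or backslash.
    entries = {
        p.strip().rstrip("\\/").lower()
        for p in path_value.split(sep)
        if p.strip()
    }
    return [d for d in required_dirs if d.rstrip("\\/").lower() not in entries]


CUDNN_DIRS = [
    r"C:\cudnn-9.0-v7.4.2.24",
    r"C:\cudnn-9.0-v7.4.2.24\bin",
]
print(missing_from_path(CUDNN_DIRS))
```

An empty list means both directories were found; otherwise the missing ones are printed.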

2. Download & Installation of CUDA

- Download it from https://developer.nvidia.com/cuda-90-download-archive

- Run the exe

- optionally download all the patches and run them
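A quick way to see whether the CUDA toolkit is reachable is to ask its compiler for its version with `nvcc --version`. The sketch below wraps that call in Python; it assumes the installer has put the CUDA bin directory on the path, and the function name `nvcc_version_text` is hypothetical.

```python
import shutil
import subprocess


def nvcc_version_text():
    """Return the output of `nvcc --version`, or None if nvcc is not on PATH."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None
    result = subprocess.run([nvcc, "--version"], capture_output=True, text=True)
    return result.stdout


text = nvcc_version_text()
print(text if text else "nvcc not found; open a new terminal or check the CUDA install")
```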

3. Install TensorFlow

- tensorflow documentation

- open a command line tool

- optionally, activate your environment

- install TensorFlow for the GPU via pip with the following command:

    pip install tensorflow-gpu

4. Test your installation

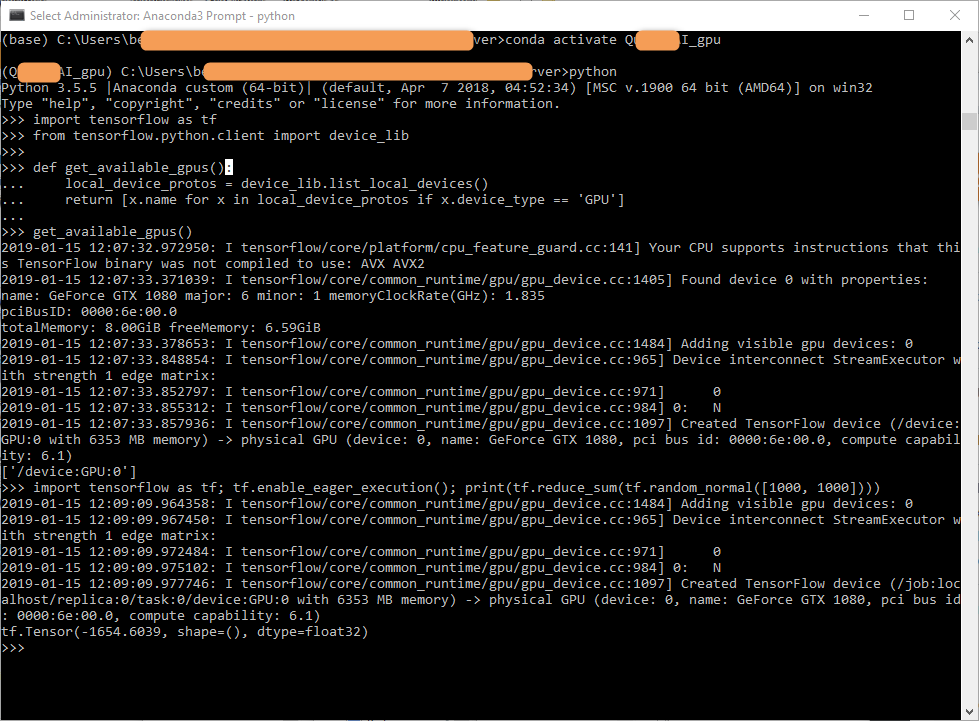

I used the following scripts to test the installation in Python.

Start a Python command line and paste the following code, which is from https://stackoverflow.com/questions/38559755/how-to-get-current-available-gpus-in-tensorflow

    from tensorflow.python.client import device_lib

    def get_available_gpus():
        # List all local devices and keep only the names of the GPUs.
        local_device_protos = device_lib.list_local_devices()
        return [x.name for x in local_device_protos if x.device_type == 'GPU']

    get_available_gpus()

It should return something like this; the details vary depending on your GPU:

2019-01-15 12:07:32.972950: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX AVX2

2019-01-15 12:07:33.371039: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1405] Found device 0 with properties:

name: GeForce GTX 1080 major: 6 minor: 1 memoryClockRate(GHz): 1.835

pciBusID: 0000:6e:00.0

totalMemory: 8.00GiB freeMemory: 6.59GiB

2019-01-15 12:07:33.378653: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1484] Adding visible gpu devices: 0

2019-01-15 12:07:33.848854: I tensorflow/core/common_runtime/gpu/gpu_device.cc:965] Device interconnect StreamExecutor with strength 1 edge matrix:

2019-01-15 12:07:33.852797: I tensorflow/core/common_runtime/gpu/gpu_device.cc:971] 0

2019-01-15 12:07:33.855312: I tensorflow/core/common_runtime/gpu/gpu_device.cc:984] 0: N

2019-01-15 12:07:33.857936: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1097] Created TensorFlow device (/device:GPU:0 with 6353 MB memory) -> physical GPU (device: 0, name: GeForce GTX 1080, pci bus id: 0000:6e:00.0, compute capability: 6.1)

['/device:GPU:0']
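The most useful line in that output is the one starting with "Created TensorFlow device", because it names the GPU, the usable memory and the compute capability. If you want to pull these values out of a log automatically, a regular expression is enough. This is only a sketch written against the log format shown above; other TensorFlow versions may word the line differently, and `parse_device_line` is a hypothetical helper.

```python
import re

# Pattern for the "Created TensorFlow device" log line shown above.
DEVICE_LINE = re.compile(
    r"Created TensorFlow device \((?P<device>\S+) with (?P<memory_mb>\d+) MB memory\)"
    r".*name: (?P<name>[^,]+), .*compute capability: (?P<capability>[\d.]+)"
)


def parse_device_line(line):
    """Extract device, memory, GPU name and compute capability, or None."""
    match = DEVICE_LINE.search(line)
    if match is None:
        return None
    info = match.groupdict()
    info["memory_mb"] = int(info["memory_mb"])
    return info


sample = (
    "Created TensorFlow device (/device:GPU:0 with 6353 MB memory) -> "
    "physical GPU (device: 0, name: GeForce GTX 1080, "
    "pci bus id: 0000:6e:00.0, compute capability: 6.1)"
)
print(parse_device_line(sample))
```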

And from https://www.tensorflow.org/install/pip I used the following code snippet to test it

    import tensorflow as tf

    tf.enable_eager_execution()

    print(tf.reduce_sum(tf.random_normal([1000, 1000])))

Alternatively, you could also run this in a cmd window:

    python -c "import tensorflow as tf; tf.enable_eager_execution(); print(tf.reduce_sum(tf.random_normal([1000, 1000])))"

Both should output something like this:

2019-01-15 12:09:09.964358: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1484] Adding visible gpu devices: 0

2019-01-15 12:09:09.967450: I tensorflow/core/common_runtime/gpu/gpu_device.cc:965] Device interconnect StreamExecutor with strength 1 edge matrix:

2019-01-15 12:09:09.972484: I tensorflow/core/common_runtime/gpu/gpu_device.cc:971] 0

2019-01-15 12:09:09.975102: I tensorflow/core/common_runtime/gpu/gpu_device.cc:984] 0: N

2019-01-15 12:09:09.977746: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1097] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 6353 MB memory) -> physical GPU (device: 0, name: GeForce GTX 1080, pci bus id: 0000:6e:00.0, compute capability: 6.1)

tf.Tensor(-1654.6039, shape=(), dtype=float32)
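The test snippets above use TensorFlow 1.x calls. In TensorFlow 2.x, `tf.enable_eager_execution` and `tf.random_normal` are no longer available under those names, so on a newer installation a version-tolerant check may be handy. The following is a sketch under that assumption; `list_gpu_names` is a hypothetical helper that returns None when TensorFlow cannot be imported at all.

```python
def list_gpu_names():
    """Return the GPU device names TensorFlow sees, or None if TensorFlow is missing."""
    try:
        import tensorflow as tf
    except ImportError:
        return None
    if hasattr(tf, "config") and hasattr(tf.config, "list_physical_devices"):
        # Newer TensorFlow versions expose the device list here.
        return [d.name for d in tf.config.list_physical_devices("GPU")]
    # TensorFlow 1.x: same approach as get_available_gpus() above.
    from tensorflow.python.client import device_lib
    return [d.name for d in device_lib.list_local_devices() if d.device_type == "GPU"]


print(list_gpu_names())
```

An empty list means TensorFlow runs but sees no GPU, which usually points back to the CUDA and cuDNN versions or the path settings.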

Ben learnt the mechanical craft from scratch in the workshop and then as a technical merchant. He then worked for a few years as a database programmer.

Then he studied mechanical engineering.

And he has completed some CAS trainings in Data Science.

Today he develops special machines for a special machine construction company in Switzerland.

In 2014 he founded the FabLab at Winterthur, Switzerland.

His main hobbies are his girlfriend, inventing new things, testing new gadgets, the FabLab Winti, 3D printing, geocaching, playing floorball, taking photos (www.belichtet.ch) and mountain biking.
